Chapter 9 - Pragmatic Projects

As your project gets under way, we need to move away from issues of individual philosophy and coding to talk about larger, project-sized issues. We aren't going to go into specifics of project management, but we will talk about a handful of critical areas that can make or break any project.

As soon as you have more than one person working on a project, you need to establish some ground rules and delegate parts of the project accordingly. In Topic 49, Pragmatic Teams, we'll show how to do this while honoring the Pragmatic philosophy.

The purpose of a software development method is to help people work together. Are you and your team doing what works well for you, or are you only investing in the trivial surface artifacts, and not getting the real benefits you deserve? In Topic 50, Coconuts Don't Cut It, we'll see why imitating the surface isn't enough and offer the true secret to success.

And of course none of that matters if you can't deliver software consistently and reliably. That's the basis of the magic trio of version control, testing, and automation: the Topic 51, Pragmatic Starter Kit.

Ultimately, though, success is in the eye of the beholder: the sponsor of the project. The perception of success is what counts, and in Topic 52, Delight Your Users, we'll show you how to delight every project's sponsor.

The last tip in the book is a direct consequence of all the rest. In Topic 53, Pride and Prejudice, we ask you to sign your work, and to take pride in what you do.

Topic 49. Pragmatic Teams

At Group L, Stoffel oversees six first-rate programmers, a managerial challenge roughly comparable to herding cats.

The Washington Post Magazine, June 9, 1985

Even in 1985, the joke about herding cats was getting old. By the time of the first edition at the turn of the century, it was positively ancient. Yet it persists, because it has a ring of truth to it. Programmers are a bit like cats: intelligent, strong willed, opinionated, independent, and often worshiped by the net.

So far in this book we've looked at pragmatic techniques that help an individual be a better programmer. Can these methods work for teams as well, even for teams of strong-willed, independent people? The answer is a resounding “yes!” There are advantages to being a pragmatic individual, but these advantages are multiplied manyfold if the individual is working on a pragmatic team.

A team, in our view, is a small, mostly stable entity of its own. Fifty people aren't a team, they're a horde.[75] A group whose members are constantly being pulled onto other assignments, and where no one knows anyone else, isn't a team either; its members are merely strangers temporarily sharing a bus stop in the rain.

A pragmatic team is small, under 10-12 or so members. Members come and go rarely. Everyone knows everyone well, trusts each other, and depends on each other.

Tip 84: Maintain Small, Stable Teams

In this section we'll look briefly at how pragmatic techniques can be applied to teams as a whole. These notes are only a start. Once you've got a group of pragmatic developers working in an enabling environment, they'll quickly develop and refine their own team dynamics that work for them.

Let's recast some of the previous sections in terms of teams.

No Broken Windows

Quality is a team issue. The most diligent developer placed on a team that just doesn't care will find it difficult to maintain the enthusiasm needed to fix niggling problems. The problem is further exacerbated if the team actively discourages the developer from spending time on these fixes.

Teams as a whole should not tolerate broken windows—those small imperfections that no one fixes. The team must take responsibility for the quality of the product, supporting developers who understand the no broken windows philosophy we describe in Topic 3, Software Entropy, and encouraging those who haven't yet discovered it.

Some team methodologies have a “quality officer”—someone to whom the team delegates the responsibility for the quality of the deliverable. This is clearly ridiculous: quality can come only from the individual contributions of all team members. Quality is built in, not bolted on.

Boiled Frogs

Remember the apocryphal frog in the pan of water, back in Topic 4, Stone Soup and Boiled Frogs? It doesn't notice the gradual change in its environment, and ends up cooked. The same can happen to individuals who aren't vigilant. It can be difficult to keep an eye on your overall environment in the heat of project development.

It's even easier for teams as a whole to get boiled. People assume that someone else is handling an issue, or that the team leader must have OK'd a change that your user is requesting. Even the best-intentioned teams can be oblivious to significant changes in their projects.

Fight this. Encourage everyone to actively monitor the environment for changes. Stay awake and aware for increased scope, decreased time scales, additional features, new environments—anything that wasn't in the original understanding. Keep metrics on new requirements.[76] The team needn't reject changes out of hand—you simply need to be aware that they're happening. Otherwise, it'll be you in the hot water.

Schedule Your Knowledge Portfolio

In Topic 6, Your Knowledge Portfolio, we looked at ways you should invest in your personal Knowledge Portfolio on your own time. Teams that want to succeed need to consider their knowledge and skill investments as well.

If your team is serious about improvement and innovation, you need to schedule it. Trying to get things done “whenever there's a free moment” means they will never happen. Whatever sort of backlog or task list or flow you're working with, don't reserve it for only feature development. The team works on more than just new features. Some possible examples include:

- Old Systems Maintenance

While we love working on the shiny new system, there's likely maintenance work that needs to be done on the old system. We've met teams who try and shove this work in the corner. If the team is charged with doing these tasks, then do them—for real.

- Process Reflection and Refinement

Continuous improvement can only happen when you take the time to look around, figure out what's working and not, and then make changes (see Topic 48, The Essence of Agility). Too many teams are so busy bailing out water that they don't have time to fix the leak. Schedule it. Fix it.

- New Tech Experiments

Don't adopt new tech, frameworks, or libraries just because “everyone is doing it,” or based on something you saw at a conference or read online. Deliberately vet candidate technologies with prototypes. Put tasks on the schedule to try the new things and analyze results.

- Learning and Skill Improvements

Personal learning and improvements are a great start, but many skills are more effective when spread team-wide. Plan to do it, whether it's the informal brown-bag lunch or more formal training sessions.

Tip 85: Schedule It to Make It Happen

Communicate Team Presence

It's obvious that developers in a team must talk to each other. We gave some suggestions to facilitate this in Topic 7, Communicate! However, it's easy to forget that the team itself has a presence within the organization. The team as an entity needs to communicate clearly with the rest of the world.

To outsiders, the worst project teams are those that appear sullen and reticent. They hold meetings with no structure, where no one wants to talk. Their emails and project documents are a mess: no two look the same, and each uses different terminology.

Great project teams have a distinct personality. People look forward to meetings with them, because they know that they'll see a well-prepared performance that makes everyone feel good. The documentation they produce is crisp, accurate, and consistent. The team speaks with one voice.[77] They may even have a sense of humor.

There is a simple marketing trick that helps teams communicate as one: generate a brand. When you start a project, come up with a name for it, ideally something off-the-wall. (In the past, we've named projects after things such as killer parrots that prey on sheep, optical illusions, gerbils, cartoon characters, and mythical cities.) Spend 30 minutes coming up with a zany logo, and use it. Use your team's name liberally when talking with people. It sounds silly, but it gives your team an identity to build on, and the world something memorable to associate with your work.

Don't Repeat Yourselves

In Topic 9, DRY—The Evils of Duplication, we talked about the difficulties of eliminating duplicated work between members of a team. This duplication leads to wasted effort, and can result in a maintenance nightmare. “Stovepipe” or “siloed” systems are common in these teams, with little sharing and a lot of duplicated functionality.

Good communication is key to avoiding these problems. And by “good” we mean instant and frictionless.

You should be able to ask a question of team members and get a more-or-less instant reply. If the team is co-located, this might be as simple as poking your head over the cube wall or down the hall. For remote teams, you may have to rely on a messaging app or other electronic means.

If you have to wait a week for the team meeting to ask your question or share your status, that's an awful lot of friction.[78] Frictionless means it's easy and low-ceremony to ask questions, share your progress, your problems, your insights and learnings, and to stay aware of what your teammates are doing.

Maintain awareness to stay DRY.

Team Tracer Bullets

A project team has to accomplish many different tasks in different areas of the project, touching a lot of different technologies. Understanding requirements, designing architecture, coding for frontend and server, testing, all have to happen. But it's a common misconception that these activities and tasks can happen separately, in isolation. They can't.

Some methodologies advocate all sorts of different roles and titles within the team, or create separate specialized teams entirely. But the problem with that approach is that it introduces gates and handoffs. Now instead of a smooth flow from the team to deployment, you have artificial gates where the work stops. Handoffs that have to wait to be accepted. Approvals. Paperwork. The Lean folks call this waste, and strive to actively eliminate it.

All of these different roles and activities are actually different views of the same problem, and artificially separating them can cause a boatload of trouble. For example, programmers who are two or three levels removed from the actual users of their code are unlikely to be aware of the context in which their work is used. They will not be able to make informed decisions.

With Topic 12, Tracer Bullets, we recommend developing individual features, however small and limited initially, that go end-to-end through the entire system. That means that you need all the skills to do that within the team: frontend, UI/UX, server, DBA, QA, etc., all comfortable and accustomed to working with each other. With a tracer bullet approach, you can implement very small bits of functionality very quickly, and get immediate feedback on how well your team communicates and delivers. That creates an environment where you can make changes and tune your team and process quickly and easily.

Tip 86: Organize Fully Functional Teams

Build teams so you can build code end-to-end, incrementally and iteratively.

Automation

A great way to ensure both consistency and accuracy is to automate everything the team does. Why struggle with code formatting standards when your editor or IDE can do it for you automatically? Why do manual testing when the continuous build can run tests automatically? Why deploy by hand when automation can do it the same way every time, repeatably and reliably?

Automation is an essential component of every project team. Make sure the team has skills at tool building to construct and deploy the tools that automate the project development and production deployment.

Know When to Stop Adding Paint

Remember that teams are made up of individuals. Give each member the ability to shine in their own way. Give them just enough structure to support them and to ensure that the project delivers value. Then, like the painter in Topic 5, Good-Enough Software, resist the temptation to add more paint.

Related Sections Include

- Topic 2, The Cat Ate My Source Code

- Topic 7, Communicate!

- Topic 12, Tracer Bullets

- Topic 19, Version Control

- Topic 50, Coconuts Don't Cut It

- Topic 51, Pragmatic Starter Kit

Challenges

- Look around for successful teams outside the area of software development. What makes them successful? Do they use any of the processes discussed in this section?

- Next time you start a project, try convincing people to brand it. Give your organization time to become used to the idea, and then do a quick audit to see what difference it made, both within the team and externally.

- You were probably once given problems such as “If it takes 4 workers 6 hours to dig a ditch, how long would it take 8 workers?” In real life, however, what factors affect the answer if the workers were writing code instead? In how many scenarios is the time actually reduced?

- Read The Mythical Man Month [Bro96] by Frederick Brooks. For extra credit, buy two copies so you can read it twice as fast.

Topic 50. Coconuts Don't Cut It

The native islanders had never seen an airplane before, or met people such as these strangers. In return for use of their land, the strangers provided mechanical birds that flew in and out all day long on a “runway,” bringing incredible material wealth to their island home. The strangers mentioned something about war and fighting. One day it was over and they all left, taking their strange riches with them.

The islanders were desperate to restore their good fortunes, and re-built a facsimile of the airport, control tower, and equipment using local materials: vines, coconut shells, palm fronds, and such. But for some reason, even though they had everything in place, the planes didn't come. They had imitated the form, but not the content. Anthropologists call this a cargo cult.

All too often, we are the islanders.

It's easy and tempting to fall into the cargo cult trap: by investing in and building up the easily-visible artifacts, you hope to attract the underlying, working magic. But as with the original cargo cults of Melanesia,[79] a fake airport made out of coconut shells is no substitute for the real thing.

For example, we have personally seen teams that claim to be using Scrum. But, upon closer examination, it turned out they were doing a daily stand up meeting once a week, with four-week iterations that often turned into six- or eight-week iterations. They felt that this was okay because they were using a popular “agile” scheduling tool. They were only investing in the superficial artifacts—and even then, often in name only, as if “stand up” or “iteration” were some sort of incantation for the superstitious. Unsurprisingly, they, too, failed to attract the real magic.

Context Matters

Have you or your team fallen into this trap? Ask yourself, why are you even using that particular development method? Or that framework? Or that testing technique? Is it actually well-suited for the job at hand? Does it work well for you? Or was it adopted just because it was being used by the latest internet-fueled success story?

There's a current trend to adopt the policies and processes of successful companies such as Spotify, Netflix, Stripe, GitLab, and others. Each has its own unique take on software development and management. But consider the context: are you in the same market, with the same constraints and opportunities, similar expertise and organization size, similar management, and similar culture? Similar user base and requirements?

Don't fall for it. Particular artifacts, superficial structures, policies, processes, and methods are not enough.

Tip 87: Do What Works, Not What's Fashionable

How do you know “what works”? You rely on that most fundamental of Pragmatic techniques:

Try it.

Pilot the idea with a small team or set of teams. Keep the good bits that seem to work well, and discard anything else as waste or overhead. No one will downgrade your organization because it operates differently from Spotify or Netflix, because even they didn't follow their current processes while they were growing. And years from now, as those companies mature and pivot and continue to thrive, they'll be doing something different yet again.

That's the actual secret to their success.

One Size Fits No One Well

The purpose of a software development methodology is to help people work together. As we discuss in Topic 48, The Essence of Agility, there is no single plan you can follow when you develop software, especially not a plan that someone else came up with at another company.

Many certification programs are actually even worse than that: they are predicated on the student being able to memorize and follow the rules. But that's not what you want. You need the ability to see beyond the existing rules and exploit possibilities for advantage. That's a very different mindset from “but Scrum/Lean/Kanban/XP/agile does it this way…” and so on.

Instead, you want to take the best pieces from any particular methodology and adapt them for use. No one size fits all, and current methods are far from complete, so you'll need to look at more than just one popular method.

For example, Scrum defines some project management practices, but Scrum by itself doesn't provide enough guidance at the technical level for teams or at the portfolio/governance level for leadership. So where do you start?

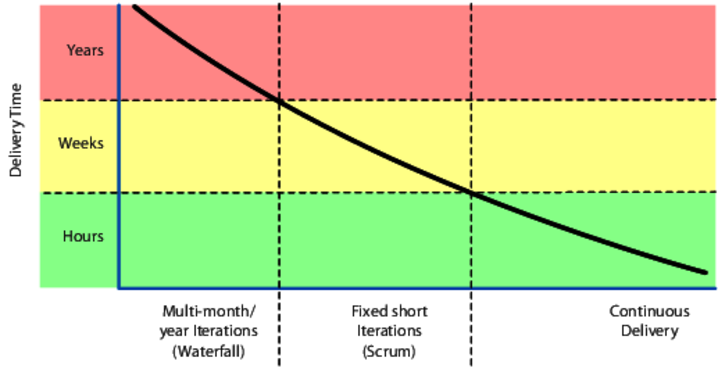

The Real Goal

The goal of course isn't to “do Scrum,” “do agile,” “do Lean,” or what-have-you. The goal is to be in a position to deliver working software that gives the users some new capability at a moment's notice. Not weeks, months, or years from now, but now. For many teams and organizations, continuous delivery feels like a lofty, unattainable goal, especially if you're saddled with a process that restricts delivery to months, or even weeks. But as with any goal, the key is to keep aiming in the right direction.

If you're delivering in years, try to shorten the cycle to months. From months, cut it down to weeks. From a four-week sprint, try two. From a two-week sprint, try one. Then daily. Then, finally, on demand. Note that being able to deliver on demand does not mean you are forced to deliver every minute of every day. You deliver when the users need it, when it makes business sense to do so.

Tip 88: Deliver When Users Need It

In order to move to this style of continuous development, you need a rock-solid infrastructure, which we discuss in the next topic, Topic 51, Pragmatic Starter Kit. You do development in the main trunk of your version control system, not in branches, and use techniques such as feature switches to roll out test features to users selectively.
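As one illustration of a feature switch, here is a minimal Python sketch of a percentage-based rollout. The flag names, the `FLAGS` table, and the helpers `bucket` and `is_enabled` are all hypothetical, and hashing the user ID is just one common way to get a stable assignment, not something this topic prescribes.

```python
import hashlib

# Hypothetical flag table: flag name -> percentage of users who see the feature.
# In a real project this would live in configuration under version control.
FLAGS = {
    "new-checkout": 25,   # rolled out to roughly a quarter of users
    "dark-mode": 100,     # fully released
    "beta-search": 0,     # merged to trunk but switched off
}


def bucket(flag: str, user_id: str) -> int:
    """Map a (flag, user) pair to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag: str, user_id: str, flags=FLAGS) -> bool:
    """Return True if this user falls inside the flag's rollout percentage."""
    percentage = flags.get(flag, 0)  # unknown flags default to off
    return bucket(flag, user_id) < percentage
```

Because the bucket depends only on the flag name and the user ID, a given user sees the same behavior on every request, and raising the percentage only ever adds users to the rollout.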

Once your infrastructure is in order, you need to decide how to organize the work. Beginners might want to start with Scrum for project management, plus the technical practices from eXtreme Programming (XP). More disciplined and experienced teams might look to Kanban and Lean techniques, both for the team and perhaps for larger governance issues.

But don't take our word for it: investigate and try these approaches for yourself. Be careful, though, not to overdo it. Overly investing in any particular methodology can leave you blind to alternatives. You get used to it. Soon it becomes hard to see any other way. You've become calcified, and now you can't adapt quickly anymore.

Might as well be using coconuts.

Related Sections Include

- Topic 12, Tracer Bullets

- Topic 27, Don't Outrun Your Headlights

- Topic 48, The Essence of Agility

- Topic 49, Pragmatic Teams

- Topic 51, Pragmatic Starter Kit

Topic 51. Pragmatic Starter Kit

Civilization advances by extending the number of important operations we can perform without thinking.

Alfred North Whitehead

Back when cars were a novelty, the instructions for starting a Model-T Ford were more than two pages long. With modern cars, you just push a button—the starting procedure is automatic and foolproof. A person following a list of instructions might flood the engine, but the automatic starter won't.

Although software development is still an industry at the Model-T stage, we can't afford to go through two pages of instructions again and again for some common operation. Whether it is the build and release procedure, testing, project paperwork, or any other recurring task on the project, it has to be automatic and repeatable on any capable machine.

In addition, we want to ensure consistency and repeatability on the project. Manual procedures leave consistency up to chance; repeatability isn't guaranteed, especially if aspects of the procedure are open to interpretation by different people.

After we wrote the first edition of The Pragmatic Programmer, we wanted to create more books to help teams develop software. We figured we should start at the beginning: what are the most basic, most important elements that every team needs regardless of methodology, language, or technology stack? And so the idea of the Pragmatic Starter Kit was born, covering these three critical and interrelated topics:

- Version Control

- Regression Testing

- Full Automation

These are the three legs that support every project. Here's how.

Drive with Version Control

As we said in Topic 19, Version Control, you want to keep everything needed to build your project under version control. That idea becomes even more important in the context of the project itself.

First, it allows build machines to be ephemeral. Instead of one hallowed, creaky machine in the corner of the office that everyone is afraid to touch,[80] build machines and/or clusters are created on demand as spot instances in the cloud. Deployment configuration is under version control as well, so releasing to production can be handled automatically.

And that's the important part: at the project level, version control drives the build and release process.

Tip 89: Use Version Control to Drive Builds, Tests, and Releases

That is, build, test, and deployment are triggered via commits or pushes to version control, and built in a container in the cloud. Release to staging or production is specified by using a tag in your version control system. Releases then become a much more low-ceremony part of everyday life: true continuous delivery, not tied to any one build machine or developer's machine.
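To make the idea concrete, here is a small Python sketch of how a pipeline might decide what to do with a pushed ref. The `refs/heads/` and `refs/tags/` prefixes are standard Git ref namespaces, but the `staging-` and `release-` tag conventions, the stage names, and the function `plan_pipeline` are assumptions made up for this illustration. A real CI system would express the same rule in its own configuration format.

```python
from typing import List


def plan_pipeline(ref: str) -> List[str]:
    """Decide which stages run for a pushed ref (branch or tag)."""
    stages = ["build", "test"]  # every commit or push is built and tested
    if ref.startswith("refs/tags/staging-"):
        stages.append("deploy:staging")      # hypothetical staging tag convention
    elif ref.startswith("refs/tags/release-"):
        stages.append("deploy:production")   # hypothetical release tag convention
    return stages
```

With this rule, a push to `refs/heads/main` is only built and tested, while pushing a tag such as `refs/tags/release-1.4.0` adds a production deployment. No one has to log in to a particular machine to make a release happen.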

Ruthless and Continuous Testing

Many developers test gently, subconsciously knowing where the code will break and avoiding the weak spots. Pragmatic Programmers are different. We are driven to find our bugs now, so we don't have to endure the shame of others finding our bugs later.

Finding bugs is somewhat like fishing with a net. We use fine, small nets (unit tests) to catch the minnows, and big, coarse nets (integration tests) to catch the killer sharks. Sometimes the fish manage to escape, so we patch any holes that we find, in hopes of catching more and more slippery defects that are swimming about in our project pool.

Tip 90: Test Early, Test Often, Test Automatically

We want to start testing as soon as we have code. Those tiny minnows have a nasty habit of becoming giant, man-eating sharks pretty fast, and catching a shark is quite a bit harder. So we write unit tests. A lot of unit tests.

In fact, a good project may well have more test code than production code. The time it takes to produce this test code is worth the effort. It ends up being much cheaper in the long run, and you actually stand a chance of producing a product with close to zero defects.

Additionally, knowing that you've passed the test gives you a high degree of confidence that a piece of code is “done.”

Tip 91: Coding Ain't Done 'Til All the Tests Run

The automatic build runs all available tests. It's important to aim to “test for real,” in other words, the test environment should match the production environment closely. Any gaps are where bugs breed.

The build may cover several major types of software testing: unit testing; integration testing; validation and verification; and performance testing.

This list is by no means complete, and some specialized projects will require various other types of testing as well. But it gives us a good starting point.

Unit Testing

A unit test is code that exercises a module. We covered this in Topic 41, Test to Code. Unit testing is the foundation of all the other forms of testing that we'll discuss in this section. If the parts don't work by themselves, they probably won't work well together. All of the modules you are using must pass their own unit tests before you can proceed.

Once all of the pertinent modules have passed their individual tests, you're ready for the next stage. You need to test how all the modules use and interact with each other throughout the system.

Integration Testing

Integration testing shows that the major subsystems that make up the project work and play well with each other. With good contracts in place and well tested, any integration issues can be detected easily. Otherwise, integration becomes a fertile breeding ground for bugs. In fact, it is often the single largest source of bugs in the system.

Integration testing is really just an extension of the unit testing we've described—you're just testing how entire subsystems honor their contracts.

Validation and Verification

As soon as you have an executable user interface or prototype, you need to answer an all-important question: the users told you what they wanted, but is it what they need?

Does it meet the functional requirements of the system? This, too, needs to be tested. A bug-free system that answers the wrong question isn't very useful. Be conscious of end-user access patterns and how they differ from developer test data (for an example, see the story about brush strokes here).

Performance Testing

Performance or stress testing may be important aspects of the project as well.

Ask yourself if the software meets the performance requirements under real-world conditions—with the expected number of users, or connections, or transactions per second. Is it scalable?

For some applications, you may need specialized testing hardware or software to simulate the load realistically.

Testing the Tests

Because we can't write perfect software, it follows that we can't write perfect test software either. We need to test the tests.

Think of our set of test suites as an elaborate security system, designed to sound the alarm when a bug shows up. How better to test a security system than to try to break in?

After you have written a test to detect a particular bug, cause the bug deliberately and make sure the test complains. This ensures that the test will catch the bug if it happens for real.

Tip 92: Use Saboteurs to Test Your Testing

If you are really serious about testing, take a separate branch of the source tree, introduce bugs on purpose, and verify that the tests will catch them. At a higher level, you can use something like Netflix's Chaos Monkey[81] to disrupt (i.e., “kill”) services and test your application's resilience.

When writing tests, make sure that alarms sound when they should.
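A saboteur can be as simple as a deliberately broken copy of the code under test. The Python sketch below is only an illustration: `clamp`, `sabotaged_clamp`, `suite_passes`, and `alarm_sounds` are hypothetical names, and the three checks stand in for a real test suite.

```python
def clamp(value, low, high):
    """Code under test: restrict value to the range [low, high]."""
    return max(low, min(value, high))


def sabotaged_clamp(value, low, high):
    """Deliberately broken copy: the upper bound is ignored."""
    return max(low, value)


def suite_passes(fn) -> bool:
    """Run the checks against fn; True means every check passed."""
    checks = [
        fn(5, 0, 10) == 5,     # inside the range
        fn(-3, 0, 10) == 0,    # below the range
        fn(42, 0, 10) == 10,   # above the range
    ]
    return all(checks)


def alarm_sounds(original, saboteur) -> bool:
    """The suite earns trust only if it passes the original and fails the saboteur."""
    return suite_passes(original) and not suite_passes(saboteur)
```

If the third check were missing, the sabotaged version would pass too, and `alarm_sounds(clamp, sabotaged_clamp)` would report that the suite has a hole.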

Testing Thoroughly

Once you are confident that your tests are correct, and are finding bugs you create, how do you know if you have tested the code base thoroughly enough?

The short answer is “you don't,” and you never will. You might look to try coverage analysis tools that watch your code during testing and keep track of which lines of code have been executed and which haven't. These tools help give you a general feel for how comprehensive your testing is, but don't expect to see 100% coverage.[82]

Even if you do happen to hit every line of code, that's not the whole picture. What is important is the number of states that your program may have. States are not equivalent to lines of code. For instance, suppose you have a function that takes two integers, each of which can be a number from 0 to 999:

    int test(int a, int b) {
      return a / (a + b);
    }

In theory, this three-line function has 1,000,000 logical states, 999,999 of which will work correctly and one that will not (when a + b equals zero). Simply knowing that you executed this line of code doesn't tell you that—you would need to identify all possible states of the program. Unfortunately, in general this is a really hard problem. Hard as in, “The sun will be a cold hard lump before you can solve it.”
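For a state space this small we can afford to check the claim by brute force. The following Python sketch is our own port of the function above (renamed `ratio`), with a hypothetical helper `failing_states` that tries every input pair. It is meant only to show the gap between executing a line and covering its states. Exhaustive enumeration like this stops being practical almost immediately in real programs.

```python
def ratio(a: int, b: int) -> int:
    """Python port of the function above; // matches C integer division here
    because both operands are non-negative."""
    return a // (a + b)


def failing_states(limit: int = 1000):
    """Try every (a, b) pair with 0 <= a, b < limit and collect the ones that raise."""
    failures = []
    for a in range(limit):
        for b in range(limit):
            try:
                ratio(a, b)
            except ZeroDivisionError:
                failures.append((a, b))
    return failures
```

A single call such as `ratio(1, 1)` executes every line of the function, so a coverage tool would report it as fully covered, yet `failing_states()` still finds the one input pair, `(0, 0)`, that blows up.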

Tip 93: Test State Coverage, Not Code Coverage

Property-Based Testing

A great way to explore how your code handles unexpected states is to have a computer generate those states.

Use property-based testing techniques to generate test data according to the contracts and invariants of the code under test. We cover this topic in detail in Topic 42, Property-Based Testing.
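As a rough illustration of the idea, the sketch below hand-rolls a tiny input generator in Python instead of using a real property-based testing library (Hypothesis is one such library for Python). Everything here is an assumption made for the example: the helper names, the choice to try boundary values before random ones, and the property itself. A real library does far more, including shrinking failures to minimal cases.

```python
import itertools
import random


def generate_pairs(low: int, high: int, runs: int, seed: int = 0):
    """Yield (a, b) test inputs: boundary values first, then random ones."""
    edges = [low, low + 1, high]
    yield from itertools.product(edges, repeat=2)
    rng = random.Random(seed)  # fixed seed keeps any failure reproducible
    for _ in range(runs):
        yield rng.randint(low, high), rng.randint(low, high)


def find_counterexample(prop, inputs):
    """Return the first input for which prop is false or raises, else None."""
    for args in inputs:
        try:
            if not prop(*args):
                return args
        except Exception:
            return args
    return None


def ratio(a: int, b: int) -> int:
    """Python port of the two-integer function from the state coverage example."""
    return a // (a + b)


def ratio_is_bounded(a: int, b: int) -> bool:
    """Property: for non-negative inputs the result is always 0 or 1."""
    return ratio(a, b) in (0, 1)
```

Calling `find_counterexample(ratio_is_bounded, generate_pairs(0, 999, 100))` reports `(0, 0)`, the state a hand-picked test could easily miss. Tightening the contract so that inputs start at 1 makes the same search come back empty.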

Tightening the Net

Finally, we'd like to reveal the single most important concept in testing. It is an obvious one, and virtually every textbook says to do it this way. But for some reason, most projects still do not.

If a bug slips through the net of existing tests, you need to add a new test to trap it next time.

Tip 94: Find Bugs Once

Once a human tester finds a bug, it should be the last time a human tester finds that bug. The automated tests should be modified to check for that particular bug from then on, every time, with no exceptions, no matter how trivial, and no matter how much the developer complains and says, “Oh, that will never happen again.”

Because it will happen again. And we just don't have the time to go chasing after bugs that the automated tests could have found for us. We have to spend our time writing new code—and new bugs.
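In practice this means every bug report ends with a new automated check. The Python sketch below continues the division example from earlier in this section, under assumptions of our own: the fix (rejecting a zero sum with `ValueError`), the function names, and the bug report itself are hypothetical.

```python
def ratio(a: int, b: int) -> int:
    """Fixed version: the zero-sum state is now rejected explicitly."""
    if a + b == 0:
        raise ValueError("a + b must not be zero")
    return a // (a + b)


def regression_zero_sum_is_rejected() -> bool:
    """Regression check for a hypothetical bug report: ratio(0, 0) crashed.

    Kept in the automated suite permanently so the bug is only found once.
    """
    try:
        ratio(0, 0)
    except ValueError:
        return True       # the fix is still in place
    except ZeroDivisionError:
        return False      # the old bug has come back
    return False          # returning a value for a zero sum is also wrong
```

The check is deliberately narrow. It names the exact failure that escaped, so if a later refactoring removes the guard, the build fails immediately and no human tester has to rediscover the problem.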

Full Automation

As we said at the beginning of this section, modern development relies on scripted, automatic procedures. Whether you use something as simple as shell scripts with rsync and ssh, or full-featured solutions such as Ansible, Puppet, Chef, or Salt, just don't rely on any manual intervention.

Once upon a time, we were at a client site where all the developers were using the same IDE. Their system administrator gave each developer a set of instructions on installing add-on packages to the IDE. These instructions filled many pages—pages full of click here, scroll there, drag this, double-click that, and do it again.

Not surprisingly, every developer's machine was loaded slightly differently. Subtle differences in the application's behavior occurred when different developers ran the same code. Bugs would appear on one machine but not on others. Tracking down version differences of any one component usually revealed a surprise.

Tip 95: Don't Use Manual Procedures

People just aren't as repeatable as computers are. Nor should we expect them to be. A shell script or program will execute the same instructions, in the same order, time after time. It is under version control itself, so you can examine changes to the build/release procedures over time as well (“but it used to work…”).
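A scripted procedure doesn't need to be elaborate. Here is a minimal Python sketch of a step runner that executes commands in a fixed order and stops at the first failure. The `run_steps` helper and the `RELEASE_STEPS` list are hypothetical, and the commands are placeholders that only invoke the Python interpreter so the sketch runs anywhere. A real project would substitute its own build, test, and deploy commands.

```python
import subprocess
import sys


def run_steps(steps):
    """Run each (name, command) step in order; stop at the first failure.

    Returns the names of the steps that completed successfully.
    """
    completed = []
    for name, command in steps:
        result = subprocess.run(command)  # command is an argument list, no shell
        if result.returncode != 0:
            print(f"step '{name}' failed with exit code {result.returncode}")
            break
        completed.append(name)
    return completed


# Placeholder release procedure (an assumption for illustration only).
RELEASE_STEPS = [
    ("build", [sys.executable, "-c", "print('building')"]),
    ("test", [sys.executable, "-c", "print('testing')"]),
    ("deploy", [sys.executable, "-c", "print('deploying')"]),
]
```

Because the list of steps is ordinary source code, it can be reviewed, diffed, and rolled back like anything else under version control.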

Everything depends on automation. You can't build the project on an anonymous cloud server unless the build is fully automatic. You can't deploy automatically if there are manual steps involved. And once you introduce manual steps (“just for this one part…”) you've broken a very large window.[83]

With these three legs of version control, ruthless testing, and full automation, your project will have the firm foundation you need so you can concentrate on the hard part: delighting users.

Related Sections Include

- Topic 11, Reversibility

- Topic 12, Tracer Bullets

- Topic 17, Shell Games

- Topic 19, Version Control

- Topic 41, Test to Code

- Topic 49, Pragmatic Teams

- Topic 50, Coconuts Don't Cut It

Challenges

- Are your nightly or continuous builds automatic, but deploying to production isn't? Why? What's special about that server?

- Can you automatically test your project completely? Many teams are forced to answer “no.” Why? Is it too hard to define the acceptable results? Won't this make it hard to prove to the sponsors that the project is “done”?

- Is it too hard to test the application logic independent of the GUI? What does this say about the GUI? About coupling?

Topic 52. Delight Your Users

When you enchant people, your goal is not to make money from them or to get them to do what you want, but to fill them with great delight.

Guy Kawasaki

Our goal as developers is to delight users. That's why we're here. Not to mine them for their data, count their eyeballs, or empty their wallets. Nefarious goals aside, even delivering working software in a timely manner isn't enough. That alone won't delight them.

Your users are not particularly motivated by code. Instead, they have a business problem that needs solving within the context of their objectives and budget. Their belief is that by working with your team they'll be able to do this.

Their expectations are not software related. They aren't even implicit in any specification they give you (because that specification will be incomplete until your team has iterated through it with them several times).

How do you unearth their expectations, then? Ask a simple question:

How will you know that we've all been successful a month (or a year, or whatever) after this project is done?

You may well be surprised by the answer. A project to improve product recommendations might actually be judged in terms of customer retention; a project to consolidate two databases might be judged in terms of data quality, or it might be about cost savings. But it's these expectations of business value that really count—not just the software project itself. The software is only a means to these ends.

And now that you've surfaced some of the underlying expectations of value behind the project, you can start thinking about how you can deliver against them:

- Make sure everyone on the team is totally clear about these expectations.

- When making decisions, think about which path forward moves closer to those expectations.

- Critically analyze the user requirements in light of the expectations. On many projects we've discovered that the stated “requirement” was in fact just a guess at what could be done by technology: it was actually an amateur implementation plan dressed up as a requirements document. Don't be afraid to make suggestions that change the requirement if you can demonstrate that they will move the project closer to the objective.

- Continue to think about these expectations as you progress through the project.

We've found that as our knowledge of the domain increases, we're better able to make suggestions on other things that could be done to address the underlying business issues. We strongly believe that developers, who are exposed to many different aspects of an organization, can often see ways of weaving different parts of the business together that aren't always obvious to individual departments.

Tip 96: Delight Users, Don't Just Deliver Code

If you want to delight your client, forge a relationship with them where you can actively help solve their problems. Even though your title might be some variation of “Software Developer” or “Software Engineer,” in truth it should be “Problem Solver.” That's what we do, and that's the essence of a Pragmatic Programmer.

We solve problems.

Related Sections Include

- Topic 12, Tracer Bullets

- Topic 13, Prototypes and Post-it Notes

- Topic 45, The Requirements Pit

Topic 53. Pride and Prejudice

You have delighted us long enough.

Jane Austen, Pride and Prejudice

Pragmatic Programmers don't shirk from responsibility. Instead, we rejoice in accepting challenges and in making our expertise well known. If we are responsible for a design, or a piece of code, we do a job we can be proud of.

Tip 97: Sign Your Work

Artisans of an earlier age were proud to sign their work. You should be, too.

Project teams are still made up of people, however, and this rule can cause trouble. On some projects, the idea of code ownership can cause cooperation problems. People may become territorial, or unwilling to work on common foundation elements. The project may end up like a bunch of insular little fiefdoms. You become prejudiced in favor of your code and against your coworkers.

That's not what we want. You shouldn't jealously defend your code against interlopers; by the same token, you should treat other people's code with respect. The Golden Rule (“Do unto others as you would have them do unto you”) and a foundation of mutual respect among the developers are critical to making this tip work.

Anonymity, especially on large projects, can provide a breeding ground for sloppiness, mistakes, sloth, and bad code. It becomes too easy to see yourself as just a cog in the wheel, producing lame excuses in endless status reports instead of good code.

While code must be owned, it doesn't have to be owned by an individual. In fact, Kent Beck's eXtreme Programming[84] recommends communal ownership of code (but this also requires additional practices, such as pair programming, to guard against the dangers of anonymity).

We want to see pride of ownership. “I wrote this, and I stand behind my work.” Your signature should come to be recognized as an indicator of quality. People should see your name on a piece of code and expect it to be solid, well written, tested, and documented. A really professional job. Written by a professional.

A Pragmatic Programmer.

Thank you.

[75]As team size grows, communication paths grow at the rate of $O(n^2)$, where $n$ is the number of team members. On larger teams, communication begins to break down and becomes ineffective.

[76]A burnup chart is better for this than the more usual burndown chart. With a burnup chart, you can clearly see how the additional features move the goalposts.

[77]The team speaks with one voice—externally. Internally, we strongly encourage lively, robust debate. Good developers tend to be passionate about their work.

[78]Andy has met teams who conduct their daily Scrum standups on Fridays.

[79]See https://en.wikipedia.org/wiki/Cargo_cult.

[80]We've seen this first-hand more times than you'd think.

[81]https://netflix.github.io/chaosmonkey

[82]For an interesting study of the correlation between test coverage and defects, see Mythical Unit Test Coverage [ADSS18].

[83]Always remember Topic 3, Software Entropy. Always.